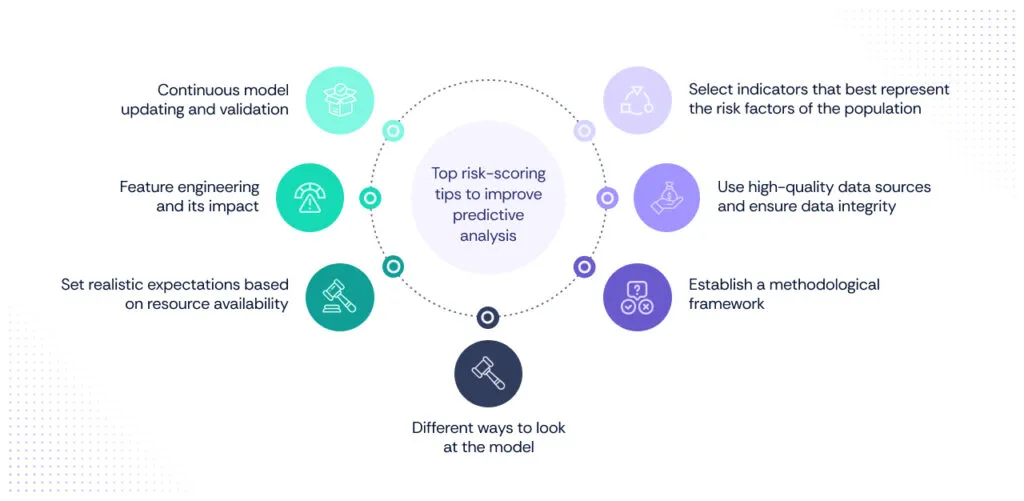

Top 7 risk-scoring tips to improve predictive analysis

Predictive analysis has become a cornerstone of modern business strategy, enabling organizations to anticipate future trends, identify potential risks, and make data-driven decisions.

What is predictive analytics, and how does it help? Predictive analytics is the practice of using data, statistical algorithms, and machine learning techniques to estimate the likelihood of future outcomes based on historical data.

The resulting insights help companies stay competitive and proactive in an ever-evolving market.

This blog aims to provide actionable tips to enhance your predictive analysis efforts. Whether you’re a data scientist, a business analyst, or a decision-maker, the insights shared here will help you improve the accuracy and reliability of your predictive models.

Tip 1: Select indicators that best represent the risk factors of the population

- Identifying key risk indicators: Selecting the right indicators is crucial for effective predictive analysis. Key Risk Indicators (KRIs) are metrics that help identify potential threats and opportunities within a given population. To select the most relevant indicators, consider factors such as industry trends, historical data, and specific business objectives. It’s important to choose indicators that are not only predictive but also actionable, providing clear insights into potential outcomes.

- Aligning indicators with business goals: Once you have identified potential indicators, the next step is to align them with your business goals. This alignment ensures that the predictive analysis will be relevant and useful for decision-making. For example, if your goal is to reduce customer churn, focus on indicators such as customer satisfaction scores, purchase frequency, and service interaction history. By aligning your indicators with your strategic objectives, you can create models that provide meaningful insights and drive business value.

As another example, a retailer forecasting demand might track key indicators such as historical sales data, seasonality, marketing campaign effectiveness, and economic indicators. By aligning these indicators with business goals, such as optimizing inventory levels and improving sales forecasts, the retailer can make informed decisions that enhance profitability and customer satisfaction.
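One rough way to check whether a candidate indicator is actually predictive is to measure how strongly it correlates with the outcome you care about. The sketch below ranks a few candidate churn indicators by the absolute value of their Pearson correlation with a churn flag. The indicator names and all data values are made up for illustration, and correlation is only a first screen: it misses non-linear relationships and says nothing about whether an indicator is actionable.

```python
from math import sqrt

def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        return 0.0  # a constant series carries no signal
    return cov / sqrt(var_x * var_y)

# Hypothetical data: one value per customer; churned is 1 if the customer left.
candidates = {
    "satisfaction_score": [9, 8, 3, 2, 7, 4, 9, 1],
    "purchases_per_month": [5, 4, 1, 1, 3, 2, 6, 0],
    "account_id_last_digit": [1, 7, 3, 9, 8, 2, 4, 6],  # deliberately meaningless
}
churned = [0, 0, 1, 1, 0, 1, 0, 1]

# Rank indicators from strongest to weakest linear relationship with churn.
ranking = sorted(
    ((name, pearson(values, churned)) for name, values in candidates.items()),
    key=lambda item: abs(item[1]),
    reverse=True,
)
for name, r in ranking:
    print(f"{name}: r = {r:+.2f}")
```

In this toy data the satisfaction score and purchase frequency come out strongly negative (higher values go with less churn), while the meaningless account digit lands at the bottom of the ranking.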

Tip 2: Use high-quality data sources and ensure data integrity

High-quality data is the bedrock of any successful predictive analysis. Poor data quality can lead to inaccurate predictions, which can have detrimental effects on decision-making processes. Ensuring that your data is accurate, complete, and relevant is essential for building reliable predictive models.

Collect high-quality data

To collect high-quality data, consider the following best practices:

- Source reliability: Use reputable sources for your data to ensure its credibility.

- Data cleaning: Regularly clean your data to remove duplicates, correct errors, and fill in missing values.

- Standardization: Standardize data formats to maintain consistency across datasets.

- Documentation: Maintain thorough documentation of your data sources, collection methods, and processing steps to ensure transparency and reproducibility.
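The cleaning and standardization practices above can be sketched in a few lines of plain Python. This is a minimal illustration, not a production pipeline: the field names (`customer_id`, `country`, `monthly_spend`) and the records are hypothetical, and median imputation is just one of several reasonable ways to fill missing values.

```python
from statistics import median

# Hypothetical raw records; field names are illustrative only.
raw_records = [
    {"customer_id": "C001", "country": " us ", "monthly_spend": 120.0},
    {"customer_id": "C002", "country": "US", "monthly_spend": None},
    {"customer_id": "C001", "country": " us ", "monthly_spend": 120.0},  # duplicate
    {"customer_id": "C003", "country": "de", "monthly_spend": 80.0},
]

def clean(records):
    """Deduplicate on customer_id, standardize country codes, fill missing spend."""
    seen, deduped = set(), []
    for record in records:
        if record["customer_id"] in seen:
            continue  # keep the first occurrence only
        seen.add(record["customer_id"])
        deduped.append(dict(record))  # copy so the input is left untouched

    # Median imputation: assumes at least one record has a known spend.
    known_spend = [r["monthly_spend"] for r in deduped if r["monthly_spend"] is not None]
    fill_value = median(known_spend)

    for record in deduped:
        record["country"] = record["country"].strip().upper()
        if record["monthly_spend"] is None:
            record["monthly_spend"] = fill_value
    return deduped

cleaned = clean(raw_records)
for record in cleaned:
    print(record)
```

Copying each record before modifying it keeps the raw data intact, which supports the documentation and reproducibility point: you can always rerun the cleaning step from the original source.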

Ensure data integrity

Data integrity involves maintaining the accuracy and consistency of data throughout its lifecycle.

Here are some strategies to ensure data integrity:

- Validation checks: Implement validation checks to detect and correct errors during data entry and processing.

- Regular audits: Conduct regular audits of your data to identify and rectify discrepancies.

- Access controls: Limit access to data to authorized personnel to prevent unauthorized modifications.

- Backup and recovery: Implement robust backup and recovery processes to protect against data loss.
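As an illustration of validation checks, the sketch below tests each incoming record against a few simple rules and collects the problems it finds instead of silently accepting bad data. The fields and the allowed ranges are assumptions chosen for the example; real rules would come from your own data dictionary.

```python
def validate_record(record):
    """Return a list of human-readable problems found in one record."""
    problems = []
    if not record.get("customer_id"):
        problems.append("missing customer_id")

    age = record.get("age")
    if not isinstance(age, int) or not 0 <= age <= 120:
        problems.append(f"age out of range: {age!r}")

    score = record.get("satisfaction_score")
    if not isinstance(score, (int, float)) or not 1 <= score <= 10:
        problems.append(f"satisfaction_score out of range: {score!r}")
    return problems

# Hypothetical records; the second and third contain deliberate errors.
records = [
    {"customer_id": "C001", "age": 34, "satisfaction_score": 8},
    {"customer_id": "", "age": 29, "satisfaction_score": 7},
    {"customer_id": "C003", "age": 430, "satisfaction_score": None},
]

# Map record position to its list of problems; valid records are omitted.
report = {}
for index, record in enumerate(records):
    problems = validate_record(record)
    if problems:
        report[index] = problems

for index, problems in report.items():
    print(index, problems)
```

A report like this can feed the regular audits mentioned above, since it shows which records failed and why.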

Tip 3: Establish a methodological framework

A methodological framework provides a structured approach to predictive analysis, ensuring consistency, accuracy, and repeatability in your models. It guides the entire process, from data collection to model deployment, and helps in managing complex projects effectively.

Key components of a methodological framework

- Define objectives: Clearly define the objectives of your predictive analysis. What questions are you trying to answer? What outcomes do you hope to achieve?

- Data preparation: Prepare your data by cleaning, transforming, and normalizing it to ensure it is suitable for analysis.

- Model selection: Choose appropriate modeling techniques based on your data and objectives. Common techniques include regression analysis, decision trees, and neural networks.

- Training and validation: Split your data into training and validation sets. Train your model on one set and validate its performance on the other to ensure it generalizes well to new data.

- Evaluation metrics: Define metrics to evaluate your model’s performance. Common metrics for classification include accuracy, precision, recall, and F1 score; regression models are often evaluated with mean squared error.

- Model tuning: Fine-tune your model parameters to optimize performance. This may involve adjusting hyperparameters, feature selection, or employing ensemble methods.

- Deployment and monitoring: Deploy your model in a production environment and continuously monitor its performance. Make adjustments as necessary to maintain accuracy.
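Two of the steps above, splitting the data and computing evaluation metrics, are easy to show concretely. The following is a minimal sketch in plain Python, assuming a binary classification problem where 1 is the positive class. The function names, the 25% validation fraction, and the example labels are all illustrative choices rather than a prescribed setup.

```python
import random

def train_validation_split(rows, validation_fraction=0.25, seed=42):
    """Shuffle a copy of rows and split it into training and validation sets."""
    shuffled = rows[:]
    random.Random(seed).shuffle(shuffled)  # fixed seed keeps the split repeatable
    cut = int(len(shuffled) * (1 - validation_fraction))
    return shuffled[:cut], shuffled[cut:]

def classification_metrics(actual, predicted):
    """Accuracy, precision, recall, and F1 for binary labels (1 = positive)."""
    pairs = list(zip(actual, predicted))
    tp = sum(1 for a, p in pairs if a == 1 and p == 1)
    fp = sum(1 for a, p in pairs if a == 0 and p == 1)
    fn = sum(1 for a, p in pairs if a == 1 and p == 0)
    tn = sum(1 for a, p in pairs if a == 0 and p == 0)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {
        "accuracy": (tp + tn) / len(pairs),
        "precision": precision,
        "recall": recall,
        "f1": f1,
    }

train_rows, validation_rows = train_validation_split(list(range(20)))

# Hypothetical validation labels and model predictions.
actual = [1, 0, 1, 1, 0, 0, 1, 0]
predicted = [1, 0, 0, 1, 0, 1, 1, 0]
metrics = classification_metrics(actual, predicted)
print(len(train_rows), len(validation_rows), metrics)
```

With one false positive and one false negative among eight predictions, all four metrics in this example work out to 0.75. On imbalanced data they usually diverge, which is why it helps to decide in advance which one matters most.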

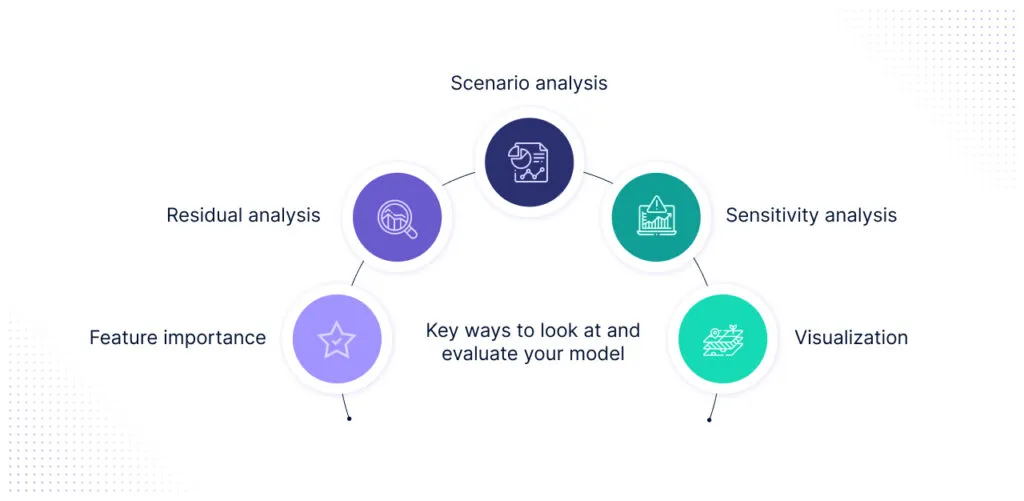

Tip 4: Understand different ways to look at the model

Understanding and interpreting your predictive model from multiple perspectives is vital for gaining comprehensive insights.

Here are key ways to look at and evaluate your model:

- Feature importance: Identify which features (variables) have the most significant impact on your predictions. This understanding helps refine your model and focus on the most critical data points.

- Residual analysis: Analyze the residuals (the differences between observed and predicted values) to detect patterns or biases in your model. Residual analysis helps in diagnosing issues and improving model accuracy.

- Scenario analysis: Run different scenarios through your model to see how it behaves under various conditions. This analysis helps in understanding the model’s robustness and reliability.

- Sensitivity analysis: Assess how changes in input variables affect the output predictions. Sensitivity analysis identifies which variables have the most influence on the model’s outcomes, helping in better decision-making.

- Visualization: Use graphical representations such as scatter plots, heatmaps, and decision trees to visualize your model’s predictions and underlying patterns. Visualization makes it easier to communicate insights and findings to stakeholders.
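Residual analysis and sensitivity analysis can both be tried on even a very simple model. In the sketch below, `predict` stands in for an already fitted model: it is a hypothetical linear risk score with made-up weights, and the observations are invented. The sensitivity function uses a basic one-at-a-time approach, nudging each input by a fixed amount while holding the others constant.

```python
def predict(features):
    """Hypothetical fitted model: a linear risk score with illustrative weights."""
    return 2.0 + 0.5 * features["late_payments"] - 0.1 * features["tenure_years"]

# Hypothetical (features, observed risk score) pairs.
observations = [
    ({"late_payments": 0, "tenure_years": 10}, 1.2),
    ({"late_payments": 2, "tenure_years": 5}, 2.4),
    ({"late_payments": 4, "tenure_years": 1}, 4.3),
    ({"late_payments": 6, "tenure_years": 2}, 4.5),
]

# Residual analysis: observed minus predicted.
# A mean far from zero, or a visible trend, suggests systematic bias.
residuals = [observed - predict(features) for features, observed in observations]
mean_residual = sum(residuals) / len(residuals)

def sensitivity(features, delta=1.0):
    """Change in prediction when each input is increased by delta, one at a time."""
    baseline = predict(features)
    effects = {}
    for name in features:
        nudged = dict(features)
        nudged[name] = features[name] + delta
        effects[name] = predict(nudged) - baseline
    return effects

effects = sensitivity({"late_payments": 2, "tenure_years": 5})
print([round(r, 2) for r in residuals], round(mean_residual, 3))
print({name: round(change, 2) for name, change in effects.items()})
```

For a linear model the sensitivities simply recover the weights, but the same function works unchanged with any `predict` that accepts a dictionary of features, which is where it becomes useful.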

By establishing a robust methodological framework and understanding different ways to look at your model, you can significantly enhance the effectiveness and accuracy of your predictive analysis. These steps provide a structured approach to predictive modeling, ensuring that your predictions are reliable and actionable.

Tip 5: Set realistic expectations based on resource availability

Effective predictive analysis starts with a clear understanding of your available resources. These resources include time, budget, technology, and personnel. By assessing these factors upfront, you can set realistic goals and avoid overextending your team.

- Time: Evaluate the time required for data collection, preprocessing, model training, and validation. Consider project deadlines and allocate time for each phase accordingly.

- Budget: Determine the financial resources available for your predictive analysis project. This includes software licenses, cloud computing costs, and potential external consultancy fees.

- Technology: Assess the tools and technologies at your disposal. Do you have access to the software and hardware needed to perform high-quality predictive analysis?

- Personnel: Evaluate the skills and experience of your team. Ensure you have the right mix of data scientists, analysts, and domain experts to execute the project effectively.

Tip 6: Feature engineering and its impact

Feature engineering is the process of using domain knowledge to create features (input variables) that make machine learning algorithms work more effectively. It is a critical step in predictive analysis, as the quality of features directly impacts the performance of your model.

- Enhancing predictive power: Well-engineered features can significantly improve the accuracy and reliability of your predictive models.

- Reducing complexity: Effective feature engineering can simplify models, making them easier to interpret and less prone to overfitting.

- Highlighting key patterns: By transforming raw data into meaningful features, you can uncover hidden patterns that may not be apparent otherwise.
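To make feature engineering concrete, the sketch below turns a raw transaction log into per-customer features that a churn or risk model could use: purchase count, average order value, and days since the last purchase. The transaction fields, the chosen features, and the `as_of` reference date are all assumptions for illustration; which features help in practice depends on your domain.

```python
from datetime import date

# Hypothetical raw transaction log.
transactions = [
    {"customer_id": "C001", "date": date(2024, 1, 5), "amount": 40.0},
    {"customer_id": "C001", "date": date(2024, 3, 20), "amount": 60.0},
    {"customer_id": "C002", "date": date(2023, 11, 2), "amount": 15.0},
]

def engineer_features(transactions, as_of):
    """Aggregate raw transactions into one feature row per customer."""
    grouped = {}
    for transaction in transactions:
        grouped.setdefault(transaction["customer_id"], []).append(transaction)

    features = {}
    for customer_id, rows in grouped.items():
        amounts = [row["amount"] for row in rows]
        last_purchase = max(row["date"] for row in rows)
        features[customer_id] = {
            "purchase_count": len(rows),
            "average_order_value": sum(amounts) / len(amounts),
            "days_since_last_purchase": (as_of - last_purchase).days,
        }
    return features

features = engineer_features(transactions, as_of=date(2024, 4, 1))
for customer_id, row in features.items():
    print(customer_id, row)
```

None of these three values appears in the raw log, yet each summarizes behavior that a model would otherwise have to infer from individual rows.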

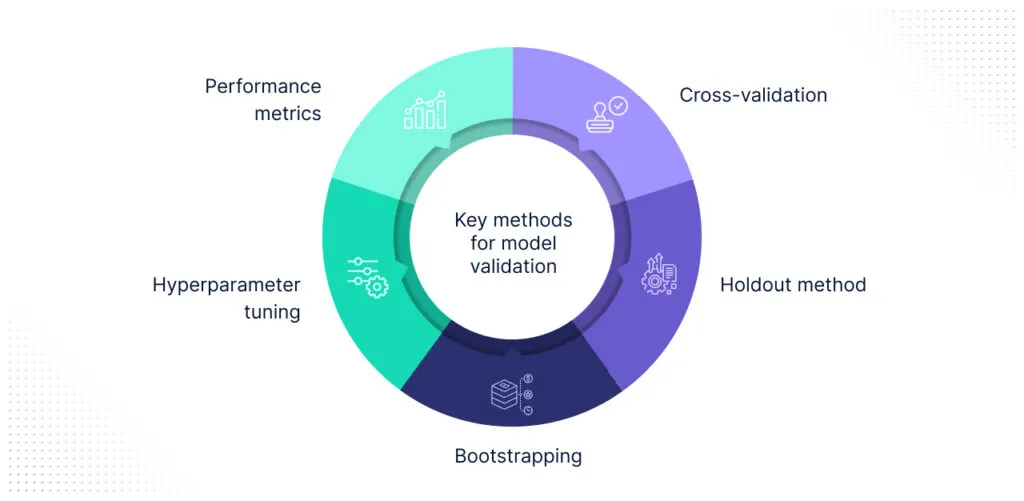

Tip 7: Continuous model updating and validation

Predictive models are not static; they need regular updates to remain accurate and relevant. The underlying data and external conditions may change over time, necessitating continuous model refinement.

- Adapting to change: Regular updates ensure your model adapts to new data trends, maintaining its predictive accuracy.

- Preventing stale models: Without updates, models can become outdated and less effective, leading to poor decision-making.
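One simple way to notice a stale model is to track its accuracy over a sliding window of recent predictions and flag it when accuracy falls below a threshold. The class below is a minimal sketch of that idea. The class name, window size, and threshold are hypothetical, and it assumes the true outcome eventually becomes known for each prediction.

```python
from collections import deque

class AccuracyMonitor:
    """Track accuracy over the most recent predictions and flag degradation."""

    def __init__(self, window_size=100, min_accuracy=0.8):
        self.outcomes = deque(maxlen=window_size)  # oldest results drop off automatically
        self.min_accuracy = min_accuracy

    def record(self, predicted, actual):
        """Store whether one prediction matched the outcome observed later."""
        self.outcomes.append(predicted == actual)

    def accuracy(self):
        """Accuracy over the current window, or None if nothing is recorded yet."""
        if not self.outcomes:
            return None
        return sum(self.outcomes) / len(self.outcomes)

    def needs_retraining(self):
        """True once the window is full and accuracy is below the threshold."""
        window_full = len(self.outcomes) == self.outcomes.maxlen
        return window_full and self.accuracy() < self.min_accuracy

monitor = AccuracyMonitor(window_size=5, min_accuracy=0.8)
for predicted, actual in [(1, 1), (0, 0), (1, 1), (1, 0), (0, 1)]:
    monitor.record(predicted, actual)
print(monitor.accuracy(), monitor.needs_retraining())
```

Waiting for a full window before raising the flag avoids reacting to a couple of early misses. In practice the flag might open a ticket or trigger a retraining job rather than retrain automatically.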

Methods for model validation

Validating your predictive model is essential to ensure it performs well on new, unseen data.

Here are key methods for model validation:

- Cross-validation: Split your data into multiple subsets and train/test the model on different combinations of these subsets. This method helps in assessing the model’s performance more robustly.

- Holdout method: Reserve a portion of the data for testing the model after training it on the remaining data. This provides a straightforward way to evaluate model performance.

- Bootstrapping: Use random sampling with replacement to create multiple training sets. Train the model on these sets to estimate its accuracy and robustness.

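- Hyperparameter tuning: Although tuning is not a validation method in itself, it relies on validation results. Adjust the model’s hyperparameters to optimize its performance, using techniques like grid search or random search to find the best combination, and judge each candidate on validation data rather than training data.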

- Performance metrics: Continuously monitor performance metrics such as accuracy, precision, recall, F1 score, and mean squared error. Regularly validate the model against these metrics to ensure it meets the desired performance standards.
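Cross-validation is easier to follow with a small worked example. The sketch below builds k folds, fits a one-variable least-squares line on the training folds, and averages the mean squared error measured on each held-out fold. The straight-line model and the synthetic data are stand-ins chosen to keep the example self-contained; the same loop applies to any model with a fit step and a predict step.

```python
import random

def k_fold_indices(n_samples, k, seed=0):
    """Yield (train_indices, test_indices) pairs for k-fold cross-validation."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    folds = [indices[i::k] for i in range(k)]  # k roughly equal, disjoint folds
    for i, test in enumerate(folds):
        train = [idx for j, fold in enumerate(folds) if j != i for idx in fold]
        yield train, test

def fit_line(xs, ys):
    """Least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    slope = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys)) / sum(
        (x - mean_x) ** 2 for x in xs
    )
    return slope, mean_y - slope * mean_x

def cross_validated_mse(xs, ys, k=5):
    """Average held-out mean squared error across k folds."""
    fold_errors = []
    for train, test in k_fold_indices(len(xs), k):
        slope, intercept = fit_line([xs[i] for i in train], [ys[i] for i in train])
        squared = [(ys[i] - (slope * xs[i] + intercept)) ** 2 for i in test]
        fold_errors.append(sum(squared) / len(squared))
    return sum(fold_errors) / len(fold_errors)

# Synthetic, nearly linear data: y = 3x + 1 with a small alternating offset.
xs = list(range(20))
ys = [3 * x + 1 + (0.5 if x % 2 else -0.5) for x in xs]
mse = cross_validated_mse(xs, ys, k=5)
print(f"cross-validated MSE: {mse:.3f}")
```

Every sample is used for testing exactly once, so the averaged error is less dependent on one lucky or unlucky split than a single holdout estimate.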

By setting realistic expectations based on resource availability, focusing on effective feature engineering, and ensuring continuous model updating and validation, you can significantly enhance the quality and reliability of your predictive analysis. These steps are crucial for building robust models that deliver actionable insights and drive better decision-making.

Best practices for selecting predictive models

1. Select the right predictive model

Selecting the right predictive model is critical to achieving accurate and actionable insights. Here are key criteria to consider:

- Data characteristics: Evaluate the size, type, and distribution of your data. Some models perform better with large datasets, while others excel with smaller, more structured data.

- Model complexity: Consider the complexity of the model relative to your problem. Simple models like linear regression are easier to interpret, while complex models like neural networks can capture intricate patterns but are harder to understand.

- Performance metrics: Determine the performance metrics that are most important for your application (e.g., accuracy, precision, recall, F1 score). Choose a model that optimizes these metrics.

- Scalability: Assess whether the model can scale with your data. Ensure it can handle increasing data volumes without a significant drop in performance.

- Interpretability: Depending on your use case, the interpretability of the model might be crucial. Decision trees, for example, are more interpretable than deep learning models.

- Computational efficiency: Evaluate the computational resources required to train and deploy the model. Ensure that your infrastructure can support these requirements.

2. Compare different predictive models

Once you have identified your criteria, compare different predictive models to determine which one best meets your needs. Here are common models to consider:

- Linear regression: Ideal for predicting continuous outcomes and understanding relationships between variables.

- Logistic regression: Suitable for binary classification problems, offering interpretable results.

- Decision trees: Provide high interpretability and are useful for both classification and regression tasks.

- Random forests: An ensemble method that enhances decision tree performance by reducing overfitting and improving accuracy.

- Support Vector Machines (SVM): Effective for high-dimensional spaces and various classification tasks.

- Neural networks: Powerful for complex tasks such as image and speech recognition but require significant computational resources.

- K-Nearest Neighbors (KNN): Simple and effective for small datasets with a clear structure.
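To show how simple some of these models are at their core, here is a minimal K-Nearest Neighbors classifier in plain Python. It is a teaching sketch rather than a tuned implementation: it uses Euclidean distance and an unweighted majority vote, and the two-feature training data with its "low" and "high" risk labels is invented for the example.

```python
from collections import Counter
from math import dist

def knn_predict(training_points, training_labels, query, k=3):
    """Classify query by majority vote among its k nearest training points."""
    by_distance = sorted(
        zip(training_points, training_labels),
        key=lambda pair: dist(pair[0], query),  # Euclidean distance to the query
    )
    nearest_labels = [label for _, label in by_distance[:k]]
    return Counter(nearest_labels).most_common(1)[0][0]

# Hypothetical training data: (late_payments, credit_utilization_decile).
training_points = [(1, 1), (1, 2), (2, 1), (8, 8), (9, 8), (8, 9)]
training_labels = ["low", "low", "low", "high", "high", "high"]

print(knn_predict(training_points, training_labels, (2, 2)))
print(knn_predict(training_points, training_labels, (7, 9)))
```

Because every prediction scans the full training set, this approach slows down as data grows, which is one reason KNN is usually recommended for smaller datasets. Features on very different scales should also be normalized first so that no single feature dominates the distance.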

Conclusion

Enhancing predictive analysis requires selecting the right models, ensuring data quality, and ongoing refinement. By adopting best practices and learning from industry leaders, you can develop robust predictive models that drive better decision-making and business outcomes.

At Scrut, we provide advanced predictive analysis tools and expert guidance to boost your predictive analysis efforts. Whether starting from scratch or refining existing models, Scrut can help.

Contact us today to learn more about how we can support your journey toward more accurate and actionable predictive analytics.

Frequently Asked Questions

1. What are some common methods to improve the accuracy of predictive analysis?

Improving accuracy involves ensuring data quality, engineering informative features, choosing suitable algorithms, and validating models with techniques such as cross-validation. Leveraging ensemble methods and regularly updating models with new data also help.

2. How does data quality impact predictive analysis?

High-quality data is crucial for accurate predictive analysis. Poor data quality can lead to incorrect predictions. Ensuring data is clean, complete, and relevant is essential for building reliable models.

3. What role does feature engineering play in predictive analysis?

Feature engineering involves creating new input features from existing data to improve model performance. It helps in uncovering hidden patterns and relationships in the data, leading to more accurate predictions.

4. How can businesses ensure their predictive models remain relevant over time?

Businesses should continuously monitor and update their predictive models to reflect changes in underlying data patterns. Implementing automated retraining and validation processes can help keep models current and effective.

5. What are some best practices for selecting the right predictive model?

Selecting the right predictive model involves understanding the problem domain, evaluating multiple models, considering the trade-offs between complexity and interpretability, and using techniques like cross-validation to compare performance.