Leveraging Generative AI for Streamlined Compliance

Not long ago, artificial intelligence (AI) was considered the stuff of sci-fi, something extraordinary and futuristic. Today, AI has become an everyday tool employed by businesses and organizations across various industries. One particularly significant application of AI is in the realm of Governance, Risk Management, and Compliance (GRC).

The use of generative AI tools has transformed the way businesses approach GRC. This technology promises to revolutionize the way organizations manage and mitigate risks, ensure compliance with regulations, and enhance their overall governance practices.

In this blog, we will dive deep into generative AI and explore its potential to boost GRC efforts, examining its applications, benefits, and challenges.

What is generative AI?

Generative AI refers to a subset of artificial intelligence that focuses on the creation, generation, or production of data, content, or other information.

Unlike traditional AI systems that are primarily designed for tasks such as data analysis, classification, and decision-making, generative AI is specialized in generating new and original content, often in the form of text, images, audio, or other data types.

Generative AI vs predictive AI

Generative AI should not be confused with predictive AI. Generative AI is designed to create entirely new content, such as text, images, or music, by learning patterns from existing data and generating novel output.

In contrast, predictive AI uses historical data to make informed predictions about future events or outcomes. It analyzes patterns in past data to forecast future events, which is useful in applications like sales forecasting and weather prediction.

While both types of AI have their unique applications, generative AI focuses on creativity and content generation, while predictive AI is geared toward making predictions based on existing data.

Why should GRC teams use generative AI?

Think GRC, and you might just picture boring paperwork and tiresome audits. It’s little surprise that companies would choose to use technology to reduce this burden. In this context, generative AI emerges as a game-changer for GRC teams.

One compelling reason for GRC teams to embrace generative AI is its transformative potential in handling the often arduous tasks of documentation and reporting. It can automate and streamline the creation of compliance reports, risk assessments, and policy documents, saving valuable time and resources.

The benefits don’t stop there. Generative AI can significantly enhance the precision and consistency of these critical documents, reducing the risk of errors that could have serious implications for compliance and risk management.

Moreover, it empowers GRC teams to swiftly analyze vast amounts of data from various sources, aiding in the identification of potential compliance issues and emerging risks. By continuously monitoring and summarizing regulatory changes, generative AI helps GRC teams stay ahead of the regulatory curve and remain agile and well-prepared in an ever-evolving business landscape.

Embracing Generative AI is not just a technological upgrade; it’s a strategic move that elevates the efficiency, accuracy, and adaptability of GRC practices.

How can GRC teams use generative AI?

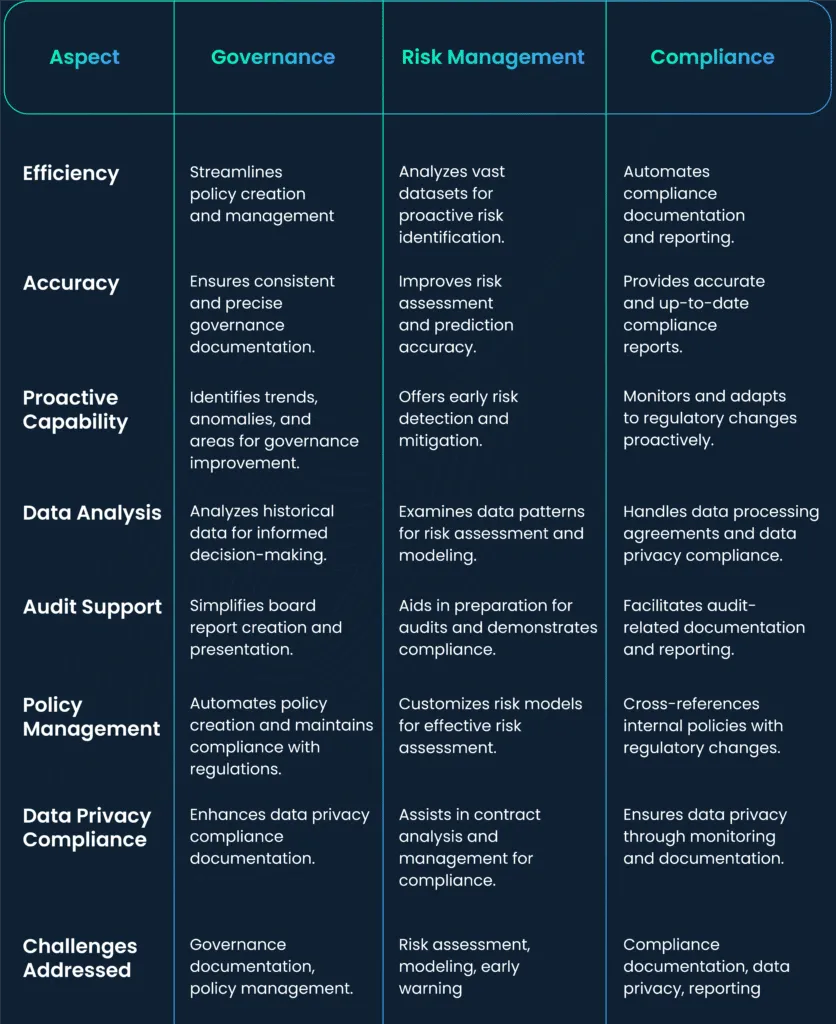

Generative AI can be used to boost all three facets of GRC: Governance, Risk, and Compliance. From streamlining the creation of compliance reports and automating the documentation of governance practices to proactively identifying emerging risks, generative AI is a multifaceted solution.

Let’s explore its versatile applications in detail and understand how it can drive better governance, mitigate risks, and ensure compliance within an organization.

How does generative AI boost governance?

Generative AI plays a crucial role in enhancing governance within the GRC realm. Here are several ways in which it aids in governance:

1. Automated documentation

Generative AI tools can automate the creation of governance documents, such as board reports, policy manuals, and governance guidelines. This not only saves time but also ensures that these documents are consistently produced with high precision, maintaining governance standards.

2. Policy management

Generative AI can assist in managing and updating internal policies and procedures in line with regulatory changes. It helps in maintaining policy consistency throughout the organization, ensuring that governance practices are aligned with the latest standards.

3. Regulatory compliance

Generative AI models can continuously monitor and summarize regulatory changes, ensuring that governance practices are always in compliance with the latest regulations. It helps organizations stay ahead of compliance requirements and adjust governance strategies accordingly.

4. Decision support

Generative AI can analyze historical data to provide insights for better decision-making in governance. It assists in identifying trends, anomalies, and potential areas for improvement, enabling governance boards to make informed decisions.

5. Board reporting

Generative AI tools can simplify the process of creating board reports by automatically extracting relevant information from various data sources and generating concise and informative reports for board members. This streamlines the reporting process and ensures that boards have access to accurate and up-to-date information.

6. Data privacy compliance

For organizations dealing with data privacy regulations, such as GDPR, generative AI can help in drafting and updating data processing agreements and other privacy-related documents, ensuring governance practices align with data protection laws.

How does generative AI enhance risk management?

Generative AI can significantly enhance risk management efforts in GRC. It empowers organizations to make more informed decisions and take proactive measures to mitigate risks effectively. Here’s how generative AI aids in risk management.

An IBM report suggests that as GRC analytics progress, Operational Risk (OpRisk) quantification, especially in the realm of cyber risk, is poised for growth, driven by the rapid rise of advanced AI methods such as machine learning.

1. Data analysis and predictive modeling

Generative AI models can analyze vast datasets, historical records, and market trends to identify potential risks and vulnerabilities. By recognizing patterns and anomalies in data, it helps GRC teams make more informed risk assessments and predictions, allowing for proactive risk management strategies.

2. Risk modeling

Generative AI can assist in building sophisticated risk models. These models take into account various factors and variables, providing a more comprehensive view of potential risks. They allow organizations to simulate and assess the impact of different risk scenarios, helping in risk mitigation planning.

3. Early warning systems

Generative AI can continuously monitor data from internal and external sources to create early warning systems for emerging risks. By providing timely alerts and insights, it enables GRC teams to address potential issues before they escalate.

4. Fraud detection

In financial and operational risk management, generative AI can be used to detect anomalies and patterns associated with fraud. This is particularly valuable in industries prone to fraudulent activities, such as finance and insurance.

5. Risk reporting

Generative AI can streamline the creation of risk assessment reports, ensuring that they are generated consistently and accurately. This helps in presenting a clear picture of potential risks to stakeholders, facilitating risk communication and management.

6. Supply chain risk management

Generative AI can analyze supply chain data and external factors like geopolitical events and economic indicators to identify vulnerabilities and disruptions in the supply chain. This enables organizations to take proactive measures to mitigate supply chain risks.

7. Regulatory compliance for risk management

In heavily regulated industries, generative AI can assist in ensuring that risk management practices are in line with evolving compliance requirements. It can automate the process of documenting risk assessments, risk mitigation plans, and regulatory compliance reports.

8. Natural Language Processing (NLP) for risk documents

Generative AI’s NLP capabilities can be applied to analyze legal and regulatory texts related to risk management. It helps in extracting relevant information from complex documents, ensuring that organizations stay compliant and well-informed about regulatory changes affecting risk management.

How does generative AI facilitate compliance?

Generative AI offers several ways to boost compliance within the GRC framework. It not only improves compliance efficiency but also reduces the risk of compliance-related issues and penalties. Here’s a look at some of the ways in which generative AI can boost compliance.

1. Automated documentation

Generative AI tools can streamline the creation of compliance documents, such as policy manuals, audit reports, and regulatory filings. They automate the generation of these documents, ensuring that they are consistently produced with high precision. This reduces the risk of human error in compliance-related documentation.

According to an IBM report, 42% of GRC professionals believe that AI’s substantial impact will be on data validation for regulatory reporting.

2. Regulatory monitoring

Generative AI continuously scans and summarizes regulatory changes, enabling GRC teams to stay up-to-date with the latest compliance requirements. This proactive approach ensures that organizations can adapt their compliance strategies and policies swiftly in response to evolving regulations.

3. Natural Language Processing (NLP)

Generative AI with NLP capabilities can be used to analyze complex legal and regulatory texts. It extracts relevant information, tracks changes in regulations, and assesses their impact on the organization’s compliance status. This can save time and ensure that no critical compliance details are missed.

4. Data privacy compliance

In industries subject to data privacy regulations like GDPR or CCPA, generative AI can assist in data discovery and classification. It helps organizations generate privacy-related documentation, such as data processing agreements, and ensures that they align with data protection laws.

5. Policy and procedure compliance

Generative AI can help organizations maintain internal policies and procedures in compliance with regulatory changes. It automates policy management, ensuring that the organization adheres to the latest compliance standards consistently.

6. Audit support

Generative AI can assist in the preparation of audit-related documentation and reports, making the audit process more efficient. It helps organizations demonstrate their adherence to compliance requirements, simplifying the auditing process.

7. Employee training

Generative AI can generate training materials and e-learning modules for compliance training. It ensures that employees are well-informed about compliance regulations and internal policies, reducing the risk of compliance breaches due to lack of awareness.

8. Contract compliance

Generative AI can assist in contract analysis and management, ensuring that contracts adhere to compliance standards. It helps identify clauses that may pose compliance risks and provides recommendations for contract revisions.

Benefits of using AI in GRC

The generative AI landscape within GRC is paving the way for automated compliance documentation and risk identification, streamlining critical aspects of governance, risk, and compliance management.

Incorporating generative AI into your GRC program can significantly improve efficiency, accuracy, and security while ensuring compliance with industry standards and regulations.

It empowers GRC teams to focus on strategic tasks and decision-making, ultimately strengthening your organization’s overall security and compliance posture. Here are some generative AI use cases in GRC.

A. Enhances compliance documents

Generative AI possesses the capability to not just review but also enhance your existing compliance documents. These AI systems, including ChatGPT, are adept at identifying errors or areas of improvement in your governance framework.

They can help by pinpointing outdated or inconsistent policies, highlighting vague language, and suggesting revisions to create more effective, up-to-date, and comprehensive compliance documents.

By harnessing generative AI for this purpose, GRC teams can ensure that their policies and procedures align with current regulatory standards, thereby reducing compliance risks.

B. Addresses inconsistencies

Generative AI tools are invaluable in identifying inconsistencies and gaps between policies. These discrepancies often arise when different authors draft policies or updates occur at different times. Generative AI can detect these variations and align the entire compliance framework.

By pinpointing contradictions and missing links, AI ensures that your policies are coherent and logically structured, which is essential for maintaining a unified approach to governance and achieving compliance with legal and industry standards.

C. Identifies missing controls

Large language models (LLMs) employed in generative AI have the capability to thoroughly review your documentation and identify controls that are either missing or need enhancement. By performing this critical function, they assist in creating a more robust and comprehensive compliance framework.

The identification of missing controls is essential for minimizing security vulnerabilities, thereby reducing potential risks and ensuring that all aspects of governance and compliance are adequately addressed.

D. Automates audits

Generative AI can streamline and automate the audit process, a crucial aspect of GRC. By training LLMs to comprehend your internal data, you can enable auditors to rapidly access specific information and evidence without disrupting your team’s workflow.

This automation proves particularly useful for gathering audit data from various sources, such as project management tools like Jira or Asana, document storage platforms like Google Docs, and version control systems like GitHub.

Not only does this save time for your team, but it also improves the overall audit experience for both your internal team and external auditors. It enhances efficiency and ensures a smoother audit process.

E. Limits external access

External audits necessitate that external parties access your data. To enhance data security, it is crucial to restrict their access only to the information necessary for the audit. Generative AI is instrumental in controlling and limiting the data made accessible to external auditors.

This approach aligns with the principle of least privilege, ensuring that auditors only view the essential information required for their review. It strengthens the security of your sensitive data during the audit process.

F. Builds customer trust

An organized Trust Vault, coupled with the capabilities of LLMs, can foster customer confidence in your organization. A Trust Vault is essentially a repository of organized and verified information that underscores your commitment to data security and compliance.

Well-structured documentation and a transparent approach to data security play a pivotal role in building trust with your customers. Generative AI enables you to efficiently manage and present this information, assuring your customers that their data is safeguarded. It not only saves time for your team but also enhances customer trust.

G. Auto-generates content

Generative AI facilitates the auto-generation of content, a valuable capability for creating customized white papers and efficiently completing security questionnaires. These tools enable you to quickly generate content tailored to the specific needs of different customers.

Whether it involves customizing security white papers to address different industries or adapting security questionnaires to specific requirements, generative AI streamlines content creation and enhances efficiency. It saves time and ensures that your content is well-suited to your audience.
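To make the least-privilege idea from "Limits external access" concrete, here is a minimal Python sketch of filtering an evidence library down to an audit's scope before anything is shared. The `Evidence` class, the control names, and the sample documents are hypothetical placeholders, not part of any specific GRC product.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Evidence:
    doc_id: str
    control: str      # control the document supports (hypothetical names)
    title: str


def evidence_for_audit(items, controls_in_scope):
    """Return only the evidence tied to controls the auditor is reviewing."""
    return [item for item in items if item.control in controls_in_scope]


# Hypothetical evidence library; a real one would come from your own systems.
library = [
    Evidence("DOC-1", "access-review", "Q3 access review sign-off"),
    Evidence("DOC-2", "payroll", "Salary bands"),
    Evidence("DOC-3", "change-management", "Release approval log"),
]

shared = evidence_for_audit(library, {"access-review", "change-management"})
print([item.doc_id for item in shared])  # ['DOC-1', 'DOC-3']
```

The filter runs before any AI tool or auditor sees the data, so out-of-scope material such as the payroll document never leaves your side.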

What to watch out for when using generative AI in GRC?

When using generative AI in GRC, it’s essential to be aware of potential pitfalls and considerations to ensure responsible and effective implementation. Here’s what to watch out for:

A. Accuracy and hallucinations

Generative AI, while powerful, can sometimes produce responses without a factual basis. Always review and validate the information generated before relying on it for decision-making or reporting. Incorrect or misleading data can lead to compliance issues or misinformed risk assessments.

B. Bias and relevance

Generative AI relies on historical data, which may contain biases or outdated information. Ensure that the AI-generated content aligns with current regulatory standards and organizational policies. Watch out for any biased language or assumptions that might not be suitable for your compliance needs.

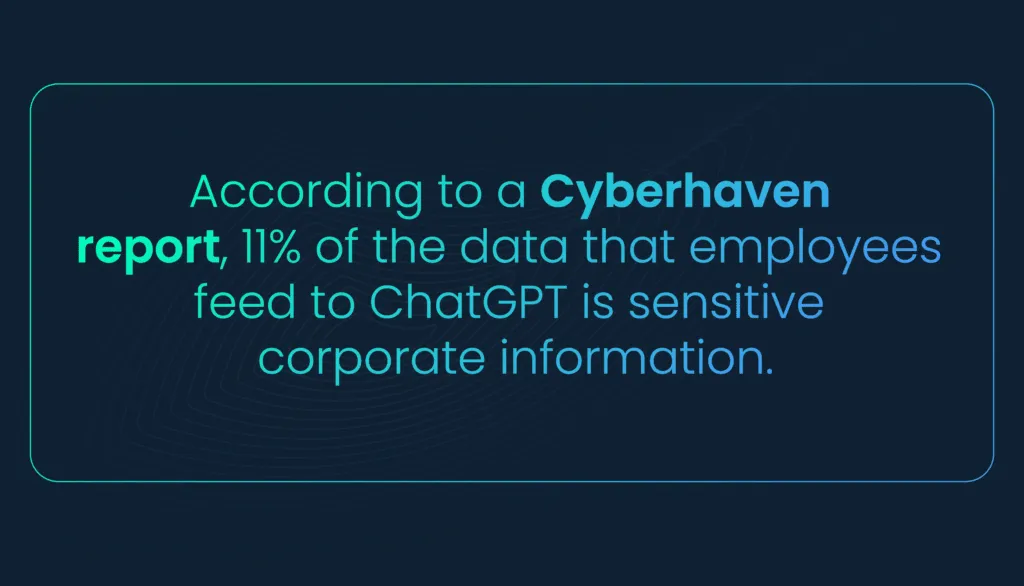

C. Data privacy and security

Generative AI may capture and store the data you input into the model. Be cautious about sharing sensitive or confidential information outside your organization. Establish clear usage guidelines and data privacy parameters to protect against potential breaches and unauthorized access to sensitive data.

D. Lack of source citations

Generative AI typically doesn’t cite sources for the information it generates. This makes it challenging to verify the reliability and credibility of the content. Exercise due diligence by cross-referencing AI-generated data with trusted sources to ensure accuracy and adherence to regulatory requirements.

E. Overreliance on AI

While generative AI is a valuable tool, avoid overrelying on it. GRC processes require human expertise and judgment to interpret complex regulatory changes, assess risks, and make important compliance decisions. Generative AI should support, not replace, human decision-making.

F. Ethical and legal concerns

Be mindful of ethical considerations when using AI in GRC. Ensure that AI use complies with ethical guidelines, industry standards, and legal requirements, particularly in cases related to data privacy, discrimination, and fairness.

G. Regular model updates

Generative AI models evolve and require updates to stay current with changing regulations and industry standards. Keep track of model updates and ensure that your AI system is aligned with the most recent compliance requirements.
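One way to act on the accuracy and source-citation concerns above is to check every regulatory reference in AI-generated text against a list your team has verified. The Python sketch below is illustrative only: the `TRUSTED_REFERENCES` set, the pattern (which covers only "GDPR Article N" style citations), and the sample draft are all assumptions made for the example.

```python
import re

# Hypothetical allow-list, maintained by the GRC team from primary sources.
TRUSTED_REFERENCES = {"GDPR Article 5", "GDPR Article 32", "GDPR Article 33"}

# Assumed citation style; extend the pattern for other regulations as needed.
REFERENCE_PATTERN = re.compile(r"\b(GDPR) Article (\d+)\b")


def unverified_references(ai_text, trusted=TRUSTED_REFERENCES):
    """List references in the text that are not on the verified list."""
    found = {f"{law} Article {num}" for law, num in REFERENCE_PATTERN.findall(ai_text)}
    return sorted(found - trusted)


draft = "The draft policy cites GDPR Article 32 and GDPR Article 412."
print(unverified_references(draft))  # ['GDPR Article 412']
```

Anything the function returns is a prompt for a human to check the source, not proof that the reference is wrong.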

Best practices for implementing generative AI in GRC

Generative AI tools themselves introduce a host of privacy and security issues. The following practices will help you use them productively and wisely.

1. Data minimization

Limit the amount of sensitive data you input into generative AI models. Only share the information necessary for your specific GRC task and avoid providing excess data that could pose security or privacy risks.

2. Use of pseudonyms

Instead of using real names or specific identifying information, use pseudonyms or generic identifiers when inputting data into the AI model. This helps protect the privacy of individuals and sensitive information.

3. Encryption

Ensure that data shared with the generative AI system is transmitted and stored securely using encryption protocols. This safeguards data from interception and unauthorized access.

4. Secure access controls

Restrict access to the generative AI system to authorized personnel only. Implement strong authentication and authorization controls to prevent unauthorized users from interacting with the AI model.

5. Data retention policies

Establish clear data retention and deletion policies. Regularly review and remove unnecessary data from the AI system to reduce the risk of data breaches or unauthorized access.

6. Secure communication

Use secure communication channels and protocols when interacting with the generative AI model. This includes secure connections and VPNs for remote access.

7. Regular auditing and monitoring

Continuously monitor and audit AI-generated content and data interactions to detect any anomalies or unauthorized access. Implement automated monitoring systems to flag suspicious activities.

8. Compliance with regulations

Ensure that your use of generative AI aligns with data privacy and security regulations, such as GDPR or HIPAA, as relevant to your industry. Comply with the requirements for data handling and privacy under these regulations.

9. Privacy impact assessments

Conduct Privacy Impact Assessments to evaluate the potential privacy risks associated with using generative AI. Address identified risks and implement safeguards accordingly.

10. Ethical AI practices

Promote ethical AI practices within your organization to ensure that generative AI models are used responsibly and that biases are minimized.

11. Regular updates and patching

Keep the generative AI system and related software up to date with the latest security patches and updates to address vulnerabilities and potential security risks.

12. Training and awareness

Train your GRC team and users on security best practices when interacting with generative AI. Ensure that they are aware of the potential risks and know how to handle sensitive data.
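The first two practices above, data minimization and pseudonyms, can be combined in a small pre-processing step that runs before any record reaches an AI model. The following Python sketch is one possible approach under stated assumptions: the field names, the `ALLOWED_FIELDS` allow-list, and the placeholder `SECRET_KEY` are hypothetical, and a real key would need to be stored in a managed secret store.

```python
import hashlib
import hmac

# Hypothetical allow-list: only these fields are needed for the GRC task.
ALLOWED_FIELDS = {"employee", "department", "finding"}

# Placeholder only; load a real key from a managed secret store.
SECRET_KEY = b"replace-with-a-managed-secret"


def pseudonym(value, key=SECRET_KEY):
    """Map a real name to a stable pseudonym using a keyed hash (HMAC-SHA256)."""
    digest = hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()
    return f"person-{digest[:8]}"


def prepare_for_model(record):
    """Drop fields that are not on the allow-list, then pseudonymize the name."""
    minimal = {k: v for k, v in record.items() if k in ALLOWED_FIELDS}
    if "employee" in minimal:
        minimal["employee"] = pseudonym(minimal["employee"])
    return minimal


record = {
    "employee": "Jane Doe",
    "salary": 90000,
    "department": "Finance",
    "finding": "Shared admin password",
}
safe = prepare_for_model(record)
print(safe)
```

Because the hash is keyed, the same person always maps to the same pseudonym, so findings can still be grouped per individual. Pseudonyms reduce exposure but do not make the data anonymous, so the other practices in this list still apply.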

Implementing generative AI in your GRC strategy

As organizations recognize the potential of generative AI in GRC, it’s crucial to have a structured approach to seamless integration. Here are practical steps and best practices to guide you through the process:

1. Define clear objectives

Begin by outlining your specific objectives for using generative AI in GRC. What are the key challenges you aim to address, and what outcomes do you expect to achieve? Having well-defined goals will guide your implementation strategy.

2. Select the right generative AI model

Choose a generative AI model that aligns with your GRC needs. Consider factors such as the model’s capabilities, customization options, and the compatibility of data sources.

3. Data preparation

Ensure your data is well-structured and organized. Clean and prepare the data you intend to feed into the AI model. This step is critical for accurate results.

4. Pilot testing

Before full-scale implementation, conduct pilot tests to assess the AI model’s performance. Use a small dataset to evaluate the model’s accuracy and suitability for your GRC requirements.

5. Customization

Tailor the AI model to meet your organization’s unique GRC needs. Customize it to generate content that aligns with your compliance standards, industry regulations, and corporate culture.

6. Training and education

Provide training to GRC professionals and employees who will interact with the generative AI system. Ensure that they understand how to use the tool effectively and responsibly.

7. Data privacy and security protocols

Implement robust data privacy and security measures. Define access controls and encryption protocols to safeguard sensitive information. Create a clear policy on data handling and storage.

8. Regular monitoring and auditing

Continuously monitor the AI system’s output and user interactions. Set up automated auditing processes to identify and rectify any anomalies, biases, or inaccuracies.

9. Ethical guidelines

Establish ethical guidelines for AI use in GRC. Promote responsible AI practices and ensure that the AI system adheres to these principles in content generation.

10. Compliance with regulations

Ensure that your use of generative AI complies with relevant data protection regulations (e.g., GDPR, HIPAA) and industry-specific standards. Seek legal counsel if needed to verify compliance.

11. Cross-referencing and verification

Cross-reference AI-generated content with trusted sources and manually verify critical information. This step is vital for ensuring data accuracy and credibility.

12. Feedback mechanisms

Establish feedback mechanisms for GRC professionals to report any concerns or inaccuracies in AI-generated content. Use this feedback to fine-tune the AI model.

13. Documentation and reporting

Maintain detailed records of your AI implementation, including customization settings and usage history. This documentation can be valuable for audit purposes.

14. Continuous improvement

Embrace a culture of continuous improvement. Regularly assess the AI system’s performance and seek opportunities to enhance its capabilities and accuracy.

15. Collaboration and communication

Foster collaboration between GRC teams and IT departments. Maintain open communication channels to address any technical issues or AI-related concerns effectively.
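For the documentation and monitoring steps above, a simple starting point is an append-only log of each AI interaction. The Python sketch below shows one possible record shape. The field names, the `example-model` identifier, and the reviewer value are assumptions for illustration. It stores hashes of the prompt and output rather than the text itself, so the log does not become another copy of sensitive data.

```python
import hashlib
import json
from datetime import datetime, timezone


def usage_record(model, settings, prompt, output, reviewer):
    """Build one audit-trail entry for a single AI interaction."""
    return {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "model": model,
        "settings": settings,
        "prompt_sha256": hashlib.sha256(prompt.encode("utf-8")).hexdigest(),
        "output_sha256": hashlib.sha256(output.encode("utf-8")).hexdigest(),
        "reviewed_by": reviewer,
    }


def append_record(path, record):
    """Append the entry as one JSON line; earlier entries are never rewritten."""
    with open(path, "a", encoding="utf-8") as log:
        log.write(json.dumps(record, sort_keys=True) + "\n")


# Hypothetical values for illustration.
record = usage_record(
    model="example-model",
    settings={"temperature": 0.2},
    prompt="Summarize the access control policy.",
    output="The policy requires quarterly access reviews.",
    reviewer="j.smith",
)
print(json.dumps(record, indent=2))
```

Calling `append_record("ai_usage.jsonl", record)` after each interaction builds up a usage history that can be handed to auditors or checked later against the stored hashes.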

Summing up

As we’ve explored throughout this blog, generative AI offers a game-changing advantage, redefining how organizations approach their GRC strategies.

From governance efficiency and risk management precision to proactive compliance and real-time data analysis, generative AI tools empower GRC teams to navigate the intricate regulatory terrain with newfound agility and confidence. They bridge the gap between intricate regulations and streamlined operations.

So, why should you use generative AI in GRC? The answer is clear: because it enables you to amplify your GRC efforts, ensuring that your organization stays ahead of the curve, remains compliant, and manages risks effectively. With generative AI by your side, you’re not just managing GRC; you’re optimizing it for a brighter and more secure future.

If you are interested in using generative AI to boost your organization’s GRC, make sure you schedule a demo with Scrut today!

FAQs

1. What is generative AI, and how does it differ from other AI technologies?

Generative AI is a subset of artificial intelligence that specializes in creating new data, content, or responses. It differs from other AI technologies like predictive AI, which forecasts outcomes based on existing data. Generative AI excels in tasks that involve creativity and content generation.

2. How can generative AI enhance governance in GRC?

Generative AI streamlines policy creation, documentation, and reporting, making governance more efficient. It ensures consistency and precision in governance documents and can proactively identify trends and areas for improvement.

3. What are the advantages of using generative AI in risk management for GRC?

Generative AI aids risk management by analyzing large datasets for early risk identification, improving accuracy in risk assessments, and enhancing predictive capabilities. It helps organizations become more proactive in risk mitigation.

4. How does generative AI benefit compliance in GRC?

Generative AI automates compliance documentation, ensuring that it is accurate and up-to-date. It helps organizations respond proactively to regulatory changes, saving time and resources. It is also valuable for data privacy compliance and contract analysis.

5. What are the challenges associated with using generative AI in GRC?

Challenges include ensuring data accuracy, managing potential bias, addressing data privacy concerns, and the need for manual verification of AI-generated content. Ethical considerations and keeping up with regulatory changes are also important.

6. How can organizations integrate generative AI into their GRC strategy?

Organizations can integrate generative AI by defining clear objectives, selecting the right AI model, customizing it to their needs, and providing training to GRC professionals. They should also establish data privacy and security protocols, regularly monitor and audit the AI system, and ensure compliance with relevant regulations.