Continuous risk management strategies for AI advancements

Artificial Intelligence (AI) technology has rapidly evolved over the past few years, becoming a cornerstone of modern businesses and industries. Its applications range from data analysis and automation to enhancing decision-making processes. This evolution has led to a dynamic AI landscape that continually introduces new challenges and opportunities.

As AI becomes embedded in more parts of the organization, continuous risk management has become significantly more important. AI systems are exposed to many threats, including data breaches and cyberattacks, and organizations must address these risks proactively to protect the security and integrity of their AI-powered solutions.

In this context, as security and compliance experts, you play a crucial role in navigating the complex AI landscape. You are responsible for ensuring that AI systems adhere to regulatory requirements and industry standards, particularly in areas like data security and privacy. You help organizations establish robust security protocols, monitor compliance, and respond effectively to emerging threats.

Now, let's explore the current AI risk landscape and how continuous risk management, itself increasingly powered by AI, can strengthen data security and compliance.

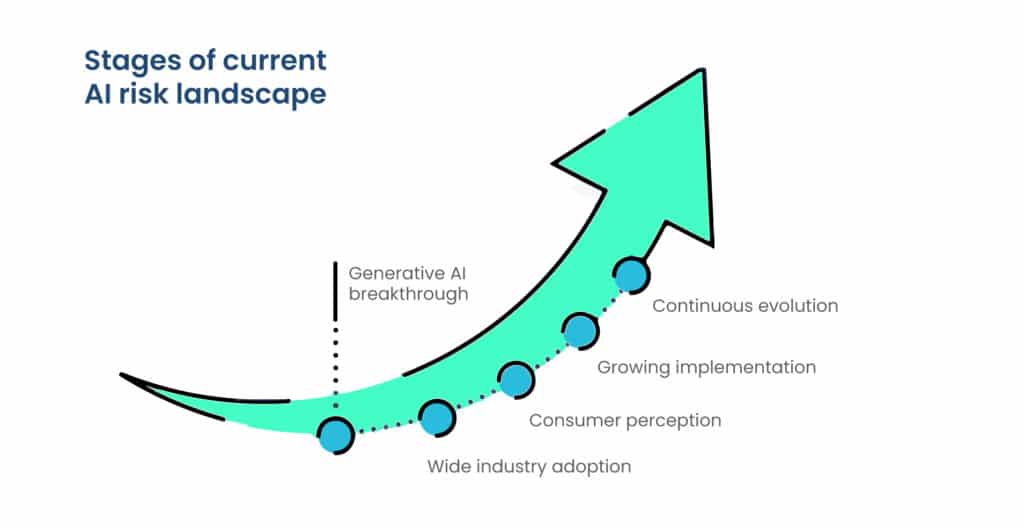

Understanding the current AI risk landscape

AI has reached a pivotal point in its development, with several notable trends and advancements characterizing its current state:

1. Generative AI breakthrough

Generative AI emerged as a key focus in 2023 and is reshaping industries. This technology lets AI systems generate new content, such as text, images, and code, driving innovation in creative fields, content production, and more.

2. Wide industry adoption

Large companies are actively implementing AI strategies, and adoption is clearly not limited to one sector: it spans industries including healthcare, finance, and manufacturing.

3. Consumer perception

Most consumers see potential in AI and recognize its role in improving many aspects of daily life. This positive perception further fuels the integration of AI into everyday applications.

4. Growing implementation

AI is no longer just a concept for the future. The global artificial intelligence market was valued at USD 59,732.12 million (about USD 59.7 billion) in 2022 and is expected to grow at a compound annual growth rate (CAGR) of 47.26% over the forecast period, reaching USD 609,038.96 million (about USD 609 billion) by 2028.

5. Continuous evolution

The AI and data science landscape remains dynamic, with new trends and technologies emerging every year. Keeping up with the latest developments is crucial for businesses and professionals in the field.
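The market projection under Growing implementation is ordinary compound growth: a value V0 growing at rate r for n years reaches V0 × (1 + r)^n. The short Python sketch below checks the quoted figures, assuming that 2022 to 2028 counts as six compounding years; the function names are illustrative, not taken from the cited report.

```python
def project_value(start_value: float, cagr: float, years: int) -> float:
    """Grow start_value at a constant compound annual growth rate for `years` years."""
    return start_value * (1 + cagr) ** years


def implied_cagr(start_value: float, end_value: float, years: int) -> float:
    """Return the constant annual rate that turns start_value into end_value."""
    return (end_value / start_value) ** (1 / years) - 1


# Figures quoted above, in USD million. Assumption: 2022 -> 2028 is six compounding years.
market_2022 = 59_732.12
market_2028 = 609_038.96
years = 2028 - 2022

projected = project_value(market_2022, 0.4726, years)
rate = implied_cagr(market_2022, market_2028, years)
print(f"Projected 2028 market: USD {projected:,.0f} million")
print(f"Implied CAGR: {rate:.2%}")
```

Both results agree with the quoted figures to within rounding (the projection lands less than 0.1% away from USD 609,038.96 million), which suggests the forecast compounds over six years.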

What are the potential risks and vulnerabilities in AI systems?

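The specific security risks and vulnerabilities of an AI system depend on its application and implementation. Common categories include:

- Data privacy risks: exposure of sensitive data used to train or query a model.

- Adversarial attacks: inputs deliberately crafted to make a model produce wrong outputs.

- Model vulnerabilities: weaknesses such as model theft or extraction through a model's interface.

- Transfer learning risks: flaws or hidden behaviors inherited from pre-trained models.

- Data poisoning: tampering with training data to corrupt a model's behavior.

- Explainability and interpretability: opaque models make errors and attacks harder to detect and audit.

- Infrastructure and deployment: insecure APIs, pipelines, and hosting environments around the model.

- Supply chain risks: compromised third-party data, libraries, or pre-trained models.

- Insider threats: misuse of AI systems or data by people with privileged access.

- Ethical and regulatory risks: biased or harmful outcomes and non-compliance with laws and regulations.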

To mitigate these security risks and vulnerabilities, organizations must adopt robust security practices, conduct thorough testing, implement proper access controls, and stay informed about emerging threats and best practices in AI security.

Real-world examples of AI-related security incidents

AI-related security incidents highlight the vulnerabilities associated with artificial intelligence systems. Here are some real-world examples:

1. Self-driving car vulnerabilities

Autonomous vehicles rely heavily on AI for navigation and decision-making. Security researchers have demonstrated vulnerabilities that could allow malicious actors to manipulate self-driving car systems, potentially causing accidents or unauthorized control of vehicles (Belfer Center).

2. AI-generated deepfakes

AI-driven deepfake technology has been used to create convincing forged videos and audio recordings. These have been exploited for malicious purposes, including spreading misinformation and impersonating individuals to commit fraud (Belfer Center).

3. AI-enhanced cyberattacks

Cybercriminals use AI to make their attacks more sophisticated. AI can help attackers analyze data rapidly, evade security measures, and target specific vulnerabilities, producing more potent and adaptive cyber threats (Malwarebytes).

4. AI-driven phishing

AI-powered phishing attacks are becoming harder to detect. Attackers use AI to craft highly personalized, convincing phishing emails, increasing the likelihood of successful attacks and data breaches (Malwarebytes).

5. Insider threats

While AI can enhance insider threat detection, there have been instances where employees with privileged access have exploited their knowledge of AI systems to steal sensitive data or manipulate AI algorithms for malicious purposes. Such incidents highlight the need for robust access controls and monitoring.

6. AI-enhanced social engineering

Attackers use AI to automate and enhance social engineering techniques, making it easier to trick individuals into divulging sensitive information or clicking on malicious links. AI can analyze vast amounts of data to craft convincing social engineering attacks (Malwarebytes).

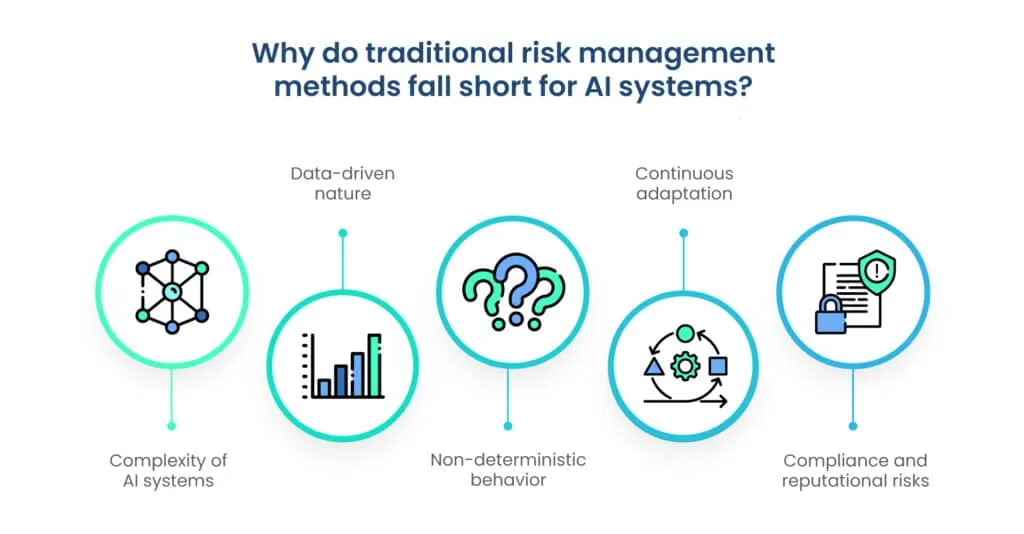

Drawbacks of traditional risk management methods for AI systems

Traditional risk management approaches may fall short in the AI context for several reasons:

1. Complexity of AI systems

AI systems are often highly complex and dynamic, making them challenging to assess and manage using traditional risk management methods. The rapid evolution of AI algorithms and technologies requires continuous monitoring and adaptation.

2. Data-driven nature

AI relies heavily on data, and the quality of that data can introduce significant risks. Traditional risk management may not adequately address data-related risks, such as bias in training data or data breaches.

3. Non-deterministic behavior

AI systems can exhibit non-deterministic behavior, making it challenging to predict and mitigate risks. Traditional risk management is typically designed for deterministic systems and may not account for AI's unpredictability.

4. Continuous adaptation

AI models learn and adapt over time. Traditional risk management often cannot monitor and adjust continuously to the evolving nature of AI systems, leaving organizations vulnerable to emerging risks.

5. Compliance and reputational risks

AI systems can pose compliance and reputational risks. Traditional risk management functions may struggle to keep up with the regulatory landscape and the potential fallout from AI-related controversies.

The dynamic relationship between AI and integrated risk management

The dynamic nature of AI, characterized by the factors below, makes ongoing risk assessment a critical part of any AI application. Continuous assessment helps keep AI systems effective, secure, and aligned with organizational objectives as their environment changes.

1. Continuous learning

Many AI systems, such as machine learning models, are retrained or updated as new data arrives, and the data they see in production keeps changing. This introduces the risk of model drift, where a model's performance degrades over time. Regular AI risk assessments are essential to monitor and address model drift and maintain accurate predictions.

2. Changing threat landscape

In cybersecurity, AI-driven risk assessments are vital because the threat landscape is constantly evolving. New cyber threats and vulnerabilities emerge regularly, and AI can help detect and respond to these threats in real-time. Continuous risk assessment allows organizations to adapt to these changing threats.

3. Adaptive risk management

AI can play a role in adaptive risk management, where risk assessments are updated dynamically based on real-time data and events. This approach enables organizations to respond quickly to emerging risks and make informed decisions to mitigate them.

4. Improved decision-making

AI can analyze vast amounts of data and identify patterns that may not be apparent through traditional methods. Continuous risk assessment powered by AI provides organizations with more accurate and timely insights, enhancing decision-making and risk mitigation strategies.
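The model drift described under Continuous learning can be watched with a simple statistic. One common choice, swapped in here as an illustration rather than anything the text prescribes, is the population stability index (PSI), which compares the distribution of a model input in production against its training baseline. The standard-library sketch below is one way to compute it; the function names, the ten quantile bins, and the 0.1 and 0.25 alert levels (widely used rules of thumb, not a standard) are assumptions. PSI flags shifts in the data a model sees, which often precede a loss of accuracy, but it does not measure accuracy directly.

```python
import bisect
import math
import random
import statistics


def population_stability_index(baseline, current, bins=10, eps=1e-6):
    """PSI between a baseline sample (e.g. training data) and a current production sample."""
    # Cut points at baseline quantiles, so each bin holds about 1/bins of the baseline.
    cuts = statistics.quantiles(baseline, n=bins)

    def bin_fractions(values):
        counts = [0] * bins
        for v in values:
            counts[bisect.bisect_right(cuts, v)] += 1
        # eps keeps an empty bin from causing log(0) or division by zero.
        return [max(c / len(values), eps) for c in counts]

    expected = bin_fractions(baseline)
    actual = bin_fractions(current)
    return sum((a - e) * math.log(a / e) for e, a in zip(expected, actual))


def drift_status(psi):
    # Hypothetical alert levels based on common rules of thumb.
    if psi < 0.1:
        return "stable"
    if psi < 0.25:
        return "moderate shift: investigate"
    return "significant shift: review or retrain"


# Synthetic example: one input feature at training time vs. two production windows.
rng = random.Random(42)
training = [rng.gauss(0.0, 1.0) for _ in range(5000)]
this_week = [rng.gauss(0.0, 1.0) for _ in range(5000)]
after_change = [rng.gauss(0.75, 1.0) for _ in range(5000)]

for label, window in [("this_week", this_week), ("after_change", after_change)]:
    psi = population_stability_index(training, window)
    print(f"{label}: PSI={psi:.3f} -> {drift_status(psi)}")
```

Running a check like this on a schedule, per feature, is one way to turn "regular AI risk assessments" into something automated; a drift alert would then trigger a human review or retraining decision.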
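Adaptive risk management, as described above, means that risk assessments change as soon as new evidence arrives. A minimal sketch of that idea follows, assuming a risk register in which each entry's score is likelihood times impact (a common qualitative convention) and the likelihood is nudged toward each new signal with exponential smoothing. The class, field names, smoothing factor, and review threshold are hypothetical choices for illustration, not part of any particular framework.

```python
from dataclasses import dataclass


@dataclass
class RiskEntry:
    name: str
    impact: int          # 1 (minor) to 5 (severe), set by the risk assessor
    likelihood: float    # current estimate between 0 and 1
    alpha: float = 0.3   # smoothing factor: higher values react faster to new signals

    def observe(self, signal: float) -> None:
        """Blend a new signal (0 = nothing seen, 1 = confirmed event) into the likelihood."""
        signal = min(max(signal, 0.0), 1.0)
        self.likelihood = self.alpha * signal + (1 - self.alpha) * self.likelihood

    @property
    def score(self) -> float:
        return self.likelihood * self.impact


def needs_review(register, threshold=2.0):
    """Return entries at or above the review threshold, highest score first."""
    flagged = [entry for entry in register if entry.score >= threshold]
    return sorted(flagged, key=lambda entry: entry.score, reverse=True)


poisoning = RiskEntry("training data poisoning", impact=5, likelihood=0.1)
phishing = RiskEntry("AI-driven phishing", impact=3, likelihood=0.2)

# Three consecutive monitoring alerts about suspicious training data arrive.
for alert in (1.0, 1.0, 1.0):
    poisoning.observe(alert)

for entry in needs_review([poisoning, phishing]):
    print(f"{entry.name}: score {entry.score:.2f}")
```

After three alerts the poisoning entry's score rises from 0.5 to about 3.46 and it is the only risk flagged for review, while the phishing entry stays at 0.6. Smoothing keeps one noisy signal from swinging the register while still letting repeated evidence move it quickly.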

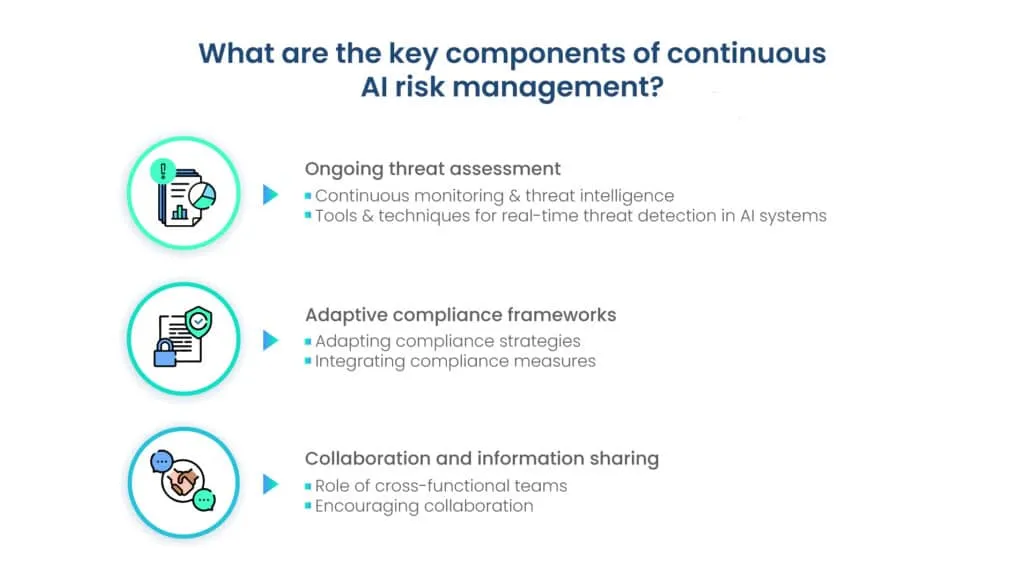

Key components of continuous AI risk management

Continuous risk management for AI systems involves several key components that help maintain security and compliance:

1. Ongoing threat assessment

a. Continuous monitoring and threat intelligence

Continuous monitoring of AI systems is crucial for detecting and responding to potential threats in real time. Threat intelligence involves collecting and analyzing data to identify emerging threats and vulnerabilities, which helps organizations stay ahead of evolving risks.

b. Tools and techniques for real-time threat detection in AI systems

Advanced tools and techniques, such as machine learning algorithms and anomaly detection, can identify suspicious activity or deviations from normal behavior in AI systems. Real-time threat detection enables an immediate response to potential risks.

2. Adaptive compliance frameworks

a. Adapting compliance strategies

Compliance strategies must be flexible enough to adapt to evolving AI standards and regulations. As AI technologies and regulations change, compliance measures should be updated to ensure continued adherence to legal requirements. Automated governance, risk, and compliance (GRC) solutions built with AI and machine learning can support this work.

b. Integrating compliance measures

Compliance should be integrated into the entire AI development lifecycle. This includes compliance checks during the design, development, testing, and deployment phases. It ensures that AI systems are compliant from the beginning and throughout their lifecycle.

3. Collaboration and information sharing

a. Role of cross-functional teams

Effective risk management in AI systems requires collaboration among cross-functional teams. Security, development, and compliance teams must work together to identify, assess, and mitigate risks. Each team brings unique expertise to the table, enhancing the overall security and compliance efforts.

b. Encouraging collaboration

Creating a culture of collaboration between these teams is essential. Encouraging open communication, sharing threat information, and fostering a sense of shared responsibility for security and compliance all contribute to more effective risk management.
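The anomaly detection mentioned under Tools and techniques for real-time threat detection can start as simply as comparing each new reading of a monitoring metric against a sliding window of recent readings. The sketch below assumes a hypothetical metric, inference requests per minute for a model endpoint, and flags readings more than three standard deviations from the window mean. The class name, window size, and threshold are illustrative assumptions; production systems typically combine many signals and more robust detectors.

```python
import math
from collections import deque


class RollingZScoreDetector:
    """Flags readings that deviate sharply from a sliding window of recent readings."""

    def __init__(self, window=60, threshold=3.0, min_history=10):
        self.history = deque(maxlen=window)  # recent readings treated as normal
        self.threshold = threshold           # z-score above which a reading is flagged
        self.min_history = min_history       # readings needed before any flagging starts

    def update(self, value):
        """Return True if value is anomalous; only normal readings extend the baseline."""
        if len(self.history) < self.min_history:
            self.history.append(value)
            return False
        mean = sum(self.history) / len(self.history)
        std = math.sqrt(sum((x - mean) ** 2 for x in self.history) / len(self.history))
        if std == 0:
            is_anomaly = value != mean
        else:
            is_anomaly = abs(value - mean) / std > self.threshold
        if not is_anomaly:
            self.history.append(value)
        return is_anomaly


# Hypothetical requests-per-minute readings for a model endpoint, ending in a burst.
readings = [98, 102, 100, 99, 101, 103, 97, 100, 102, 98, 100, 101, 450, 99]
detector = RollingZScoreDetector()
for minute, rpm in enumerate(readings):
    if detector.update(rpm):
        print(f"minute {minute}: {rpm} requests/min flagged for investigation")
```

Flagged readings are kept out of the baseline so that a sustained attack cannot quietly become the new normal. The trade-off is that a legitimate, permanent change in traffic will keep alerting until someone reviews it and resets the baseline.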

AI risk management framework

The AI Risk Management Framework (AI RMF), released by the National Institute of Standards and Technology (NIST) in January 2023, is a comprehensive approach to guiding organizations in managing the risks associated with AI systems. Here's an overview:

1. Collaboration

NIST has collaborated with both the private and public sectors to create this framework, emphasizing a collective effort to better manage risks related to AI.

2. Flexibility

The NIST AI Risk Management Framework is designed to be flexible, allowing it to adapt to different organizations' specific requirements and needs.

3. Lifecycle approach

It focuses on managing risks across the entire AI lifecycle, covering design, development, deployment, and ongoing monitoring and evaluation of AI systems.

4. Responsible AI

The framework describes the characteristics of trustworthy AI systems: valid and reliable; safe; secure and resilient; accountable and transparent; explainable and interpretable; privacy-enhanced; and fair, with harmful bias managed.

5. Voluntary use

The NIST AI Risk Management Framework is designed for voluntary use by organizations and individuals, providing approaches to enhance AI risk management practices.

6. Adaptation

Organizations can adapt this framework to suit their unique AI projects and requirements, making it a versatile tool for managing AI-related risks.

7. Compliance

Although the framework is voluntary and is not itself a regulation, it can help organizations meet relevant regulations and standards and confirm that their AI systems satisfy security and ethical criteria.

Best practices for security and compliance experts

Here are the best practices for security and compliance experts to stay ahead in the rapidly evolving landscape of AI:

1. Recommendations for staying informed about AI advancements

- Continuous learning: Security and compliance experts should invest in ongoing education to keep up with AI advancements. This includes attending AI-related conferences, webinars, and workshops to stay updated on the latest trends and technologies.

- Engage with AI communities: Join AI-focused online communities, forums, and social media groups where experts share insights, research, and news. This engagement can help professionals access real-time information and discussions about AI developments.

- Monitor industry publications: Regularly follow reputable publications, research papers, and blogs dedicated to AI, cybersecurity, and compliance. Subscribing to newsletters and setting up alerts for relevant keywords can help professionals stay informed.

2. Developing skills and expertise in AI-related security and compliance

- Specialized training: Enroll in AI-specific training programs and certifications related to cybersecurity and compliance. These programs provide in-depth knowledge and practical skills required for AI security and compliance roles.

- Cross-disciplinary knowledge: AI intersects with various fields, including data science, ethics, and legal regulations. Security and compliance experts should acquire cross-disciplinary knowledge to address the multifaceted challenges of AI.

- Hands-on experience: Gain practical experience by working on AI projects or simulations. This hands-on approach enhances expertise and the ability to apply security and compliance measures effectively.

3. Building a proactive and adaptive risk management strategy

- Continuous risk assessment: Implement continuous risk assessments that consider AI system lifecycles. Regularly evaluate potential threats, vulnerabilities, and compliance requirements throughout AI development and deployment.

- Adaptive compliance framework: Develop an adaptive compliance framework that can swiftly respond to changing regulations and standards. This framework should integrate compliance measures into AI development processes.

- Collaboration: Foster collaboration between security, compliance, and AI development teams. Cross-functional teamwork ensures that security and compliance considerations are embedded in AI projects from the start.
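Integrating compliance measures into AI development processes, as the adaptive compliance framework above recommends for the design, development, testing, and deployment phases, often takes the form of an automated gate that a model must pass before it is promoted. The sketch below assumes a model's documentation is available as a metadata dictionary; every field name, check, and the 0.90 accuracy floor are hypothetical stand-ins for an organization's real policy. Keeping the policy as a list of small check functions means that when a regulation or standard changes, teams edit the list rather than the pipeline.

```python
from typing import Callable, Dict, List, Optional, Tuple

Metadata = Dict[str, object]
Check = Callable[[Metadata], Optional[str]]  # returns None on pass, or a failure reason


def require_fields(*keys: str) -> Check:
    def check(meta: Metadata) -> Optional[str]:
        missing = [k for k in keys if not meta.get(k)]
        return f"missing required metadata: {', '.join(missing)}" if missing else None
    return check


def privacy_review_if_personal_data(meta: Metadata) -> Optional[str]:
    if meta.get("uses_personal_data") and not meta.get("privacy_review_approved"):
        return "personal data used without an approved privacy review"
    return None


def minimum_accuracy(floor: float) -> Check:
    def check(meta: Metadata) -> Optional[str]:
        accuracy = meta.get("eval_accuracy")
        if not isinstance(accuracy, (int, float)) or accuracy < floor:
            return f"evaluation accuracy {accuracy} is below the required {floor}"
        return None
    return check


# Hypothetical policy; update this list when regulations or internal standards change.
POLICY: List[Check] = [
    require_fields("owner", "intended_use", "training_data_source"),
    privacy_review_if_personal_data,
    minimum_accuracy(0.90),
]


def compliance_gate(meta: Metadata, policy: List[Check] = POLICY) -> Tuple[bool, List[str]]:
    """Run every check and report all failures, not just the first one."""
    failures = []
    for check in policy:
        reason = check(meta)
        if reason is not None:
            failures.append(reason)
    return (len(failures) == 0, failures)


candidate = {
    "owner": "fraud-analytics-team",
    "intended_use": "flag card transactions for manual review",
    "training_data_source": "2023 transaction warehouse extract",
    "uses_personal_data": True,
    "eval_accuracy": 0.93,
}
passed, reasons = compliance_gate(candidate)
print("release approved" if passed else f"release blocked: {reasons}")
```

Here the candidate is blocked only because it uses personal data without a recorded privacy review; once that approval is added to its metadata, the same gate lets it through. Reporting every failure at once gives security, compliance, and development teams a shared checklist instead of a trickle of one-at-a-time rejections.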

Final thoughts

As artificial intelligence (AI) rapidly integrates into industries, continuous and adaptive risk management is essential. The dynamic AI landscape, marked by breakthroughs and widespread adoption, introduces vulnerabilities seen in incidents such as self-driving car flaws and AI-driven cyberattacks.

Traditional risk management struggles with the complexity of AI. Ongoing threat assessment, real-time monitoring, adaptive compliance, and cross-functional collaboration are vital, and staying ahead of risks is key to AI security and compliance. Experts should prioritize continuous learning, engage with AI communities, and develop specialized skills.

In the evolving AI landscape, a proactive AI risk management strategy, coupled with professional expertise, is crucial for responsible and secure AI integration.

FAQs

1. Why is continuous risk management crucial for organizations integrating Artificial Intelligence (AI)?

Continuous risk management is vital for navigating the dynamic AI landscape, addressing vulnerabilities, and safeguarding against potential threats such as data breaches and cyberattacks.

2. How do security and compliance experts contribute to AI risk management?

Security and compliance experts ensure that AI systems adhere to regulatory standards, establish robust security protocols, and monitor compliance to prevent security breaches and data compromises.

3. What are the key components of continuous risk management for AI systems?

Ongoing threat assessment, real-time monitoring, adaptive compliance frameworks, and cross-functional collaboration are key components of continuous risk management, ensuring AI systems remain secure and compliant in a rapidly evolving environment.